Chapter 3: Understanding the GAN

The Intuition Behind GANs

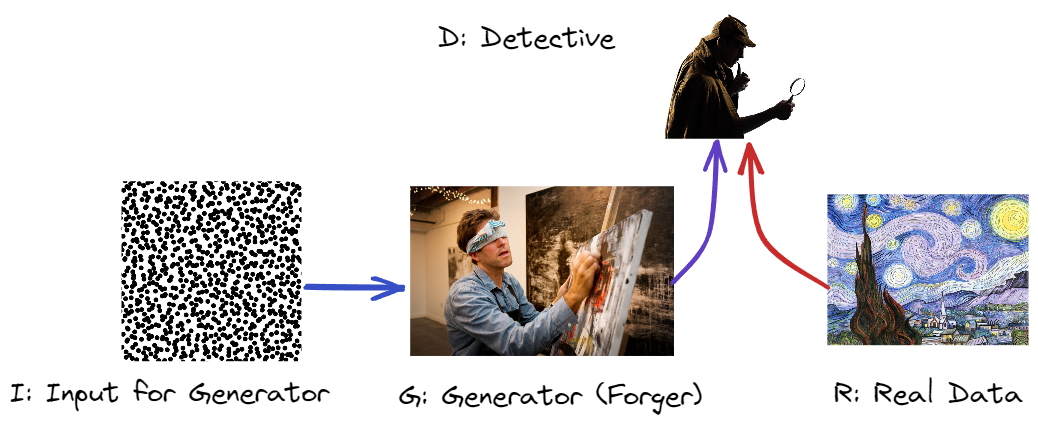

In a nutshell, Generative Adversarial Networks (GANs) work through a clever interplay between two neural networks: the Generator and the Discriminator. The Generator generates synthetic data, like images or music, aiming to create content that looks real. The Discriminator acts as a keen detective, trying to distinguish between real data and the fake creations of the Generator. As they play this adversarial game, both networks get better at their roles, with the Generator improving its ability to create realistic data, and the Discriminator becoming more adept at detecting fakes. This dynamic competition leads to the generation of remarkably realistic and creative outputs, making GANs a magical tool for AI-driven creativity.

- GAN Components: Generator and Discriminator

- GAN Training: The Adversarial Process

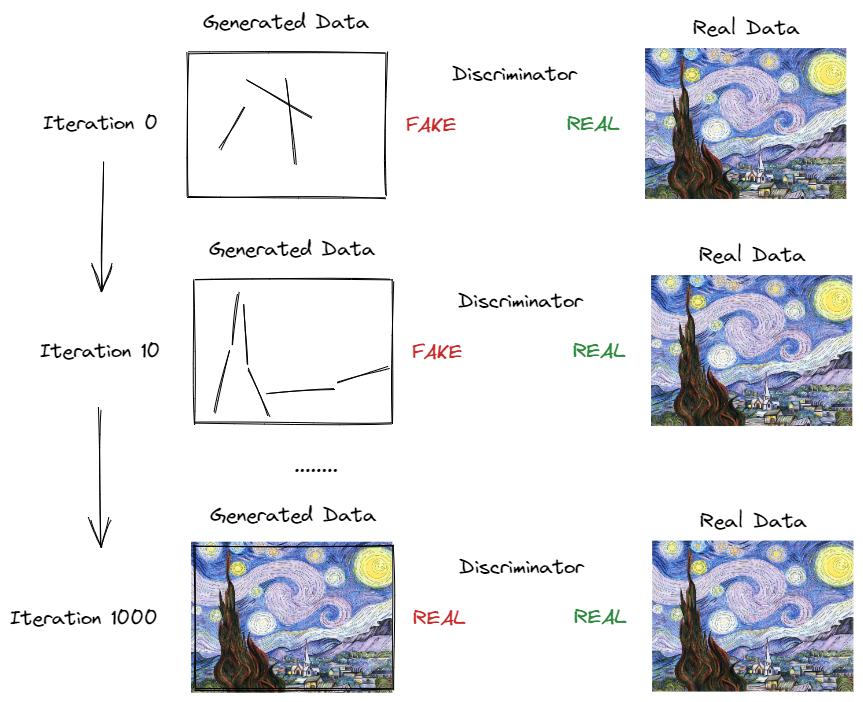

Training (Learning)

When training begins, the generator produces obviously poor (fake) data, and the discriminator quickly learns to identify that it's fake.
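A minimal sketch of how this early stage shows up in the losses, assuming the common binary cross-entropy formulation. The function names and the discriminator scores below are made up for illustration; they are not measured values. The generator loss uses the widely used "label fakes as real" form.

```python
import math

def bce(prediction, target):
    """Binary cross-entropy for a single probability in (0, 1)."""
    eps = 1e-12  # keeps log() away from zero
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def discriminator_loss(d_real, d_fake):
    """Real samples should score 1, generated samples should score 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """The generator wants its samples to be scored as real (1)."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs early in training: fakes are easy to spot.
early_real, early_fake = [0.90, 0.95, 0.85], [0.05, 0.10, 0.02]
# Hypothetical outputs later on: the discriminator is much less certain.
late_real, late_fake = [0.60, 0.55, 0.50], [0.45, 0.50, 0.40]

early_d = discriminator_loss(early_real, early_fake)
early_g = generator_loss(early_fake)
late_d = discriminator_loss(late_real, late_fake)
late_g = generator_loss(late_fake)

print(f"early: D loss={early_d:.3f}, G loss={early_g:.3f}")
print(f"late:  D loss={late_d:.3f}, G loss={late_g:.3f}")
```

With these illustrative numbers the discriminator loss starts low and the generator loss starts high, and the gap closes as the generated samples become harder to tell apart from real ones.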

Common Types of GANs

As you'd expect, there are different types of GANs. Some of the main ones include:

- Vanilla GAN

- Deep Convolutional GAN (DCGAN)

- Conditional GAN (cGAN)

- Wasserstein GAN (WGAN)

- Progressive GAN (PGAN)

- StyleGAN and StyleGAN2

(There are many others in the literature, such as StackGAN, SRGAN, ESRGAN, …)

Let's take a look at some of the most popular GANs in more detail.

Vanilla GAN

A Vanilla GAN is the original and simplest form of Generative Adversarial Network. It consists of a Generator and a Discriminator engaged in a game: the Generator produces data to fool the Discriminator, while the Discriminator tries to distinguish real data from fake. As training proceeds, the Generator produces progressively more realistic outputs.
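This game is usually written as a minimax objective. The notation here is an assumption borrowed from the standard formulation, not something defined elsewhere in this chapter: $D(x)$ is the Discriminator's estimated probability that $x$ is real, $G(z)$ is the Generator's output for a noise vector $z$, $p_{\text{data}}$ is the real data distribution, and $p_z$ is the noise distribution.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
```

The Discriminator tries to make both terms large (score real data near 1 and generated data near 0), while the Generator tries to make the second term small by pushing $D(G(z))$ toward 1.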

Deep Convolutional GANs (DCGANs)

A DCGAN uses convolutional layers instead of fully connected layers. You turn a Vanilla GAN into a DCGAN through the following steps:

- Replace any pooling layers with strided convolutions (in the discriminator) and transposed convolutions (in the generator)

- Use Batch Normalization in both the generator and the discriminator, except in the generator's output layer and the discriminator's input layer

- Remove fully connected hidden layers for deep architectures

- Use ReLU activation in the generator for all layers except the output layer (which should be tanh)

- Use leaky ReLU activation in the discriminator for all layers
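To see why strided and transposed convolutions can stand in for pooling and upsampling, it helps to track the spatial size of the feature maps. The sketch below uses the standard output-size formulas and assumes a kernel size of 4, stride of 2, and padding of 1. Those settings, the four-layer depth, and the 64x64 image size are illustrative choices, not requirements stated in this chapter.

```python
def conv_out(size, kernel=4, stride=2, padding=1):
    """Output side length of a strided convolution."""
    return (size + 2 * padding - kernel) // stride + 1

def conv_transpose_out(size, kernel=4, stride=2, padding=1):
    """Output side length of a transposed convolution (no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel

# Generator: upsample a 4x4 feature map with four transposed convolutions.
gen_sizes = [4]
for _ in range(4):
    gen_sizes.append(conv_transpose_out(gen_sizes[-1]))

# Discriminator: downsample a 64x64 image with four strided convolutions.
disc_sizes = [64]
for _ in range(4):
    disc_sizes.append(conv_out(disc_sizes[-1]))

print(gen_sizes)   # [4, 8, 16, 32, 64]
print(disc_sizes)  # [64, 32, 16, 8, 4]
```

Each layer doubles (generator) or halves (discriminator) the resolution, so the network learns its own upsampling and downsampling instead of relying on fixed pooling operations.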

Conditional GAN (cGAN)

A Conditional GAN (cGAN) is a type of Generative Adversarial Network in which both the Generator and the Discriminator receive additional information (such as labels or class information) as input. This lets you generate data for a specific condition, for example a chosen class, resulting in more targeted and controllable output generation.
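One simple way to feed the condition to the Generator is to append a one-hot encoding of the label to the noise vector. The sketch below assumes that approach. The helper names, the latent size of 8, and the 10 classes are hypothetical, and other conditioning schemes (such as learned label embeddings) are also used in practice.

```python
import random

def one_hot(label, num_classes):
    """Encode an integer class label as a one-hot list."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

def conditional_generator_input(noise, label, num_classes):
    """Concatenate the noise vector with the one-hot condition."""
    return noise + one_hot(label, num_classes)

random.seed(0)
latent_dim = 8    # hypothetical noise size
num_classes = 10  # hypothetical number of classes, e.g. digits 0-9

noise = [random.gauss(0.0, 1.0) for _ in range(latent_dim)]
g_input = conditional_generator_input(noise, label=3, num_classes=num_classes)

print(len(g_input))          # 18
print(g_input[latent_dim:])  # one-hot vector with a 1.0 at index 3
```

The Discriminator can be conditioned the same way, by appending the label encoding to its own input, so that it judges whether a sample is realistic for that specific label.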

Wasserstein GAN (WGAN)

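Wasserstein GAN (WGAN) is a variant of Generative Adversarial Network that replaces the original loss function with one based on the Wasserstein distance (also called the Earth Mover's distance). The Discriminator, called a critic in this setting, outputs an unbounded score rather than a probability. This tends to provide more stable training and better gradient flow, leading to improved training dynamics and higher quality output generation.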
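A rough sketch of the WGAN losses, assuming the original weight-clipping variant. The function names and the scores are made up for illustration. The clipping constant 0.01 is the default reported in the original WGAN paper, and later variants replace clipping with a gradient penalty.

```python
def mean(values):
    return sum(values) / len(values)

def wgan_critic_loss(real_scores, fake_scores):
    """The critic tries to score real samples higher than generated ones."""
    return mean(fake_scores) - mean(real_scores)

def wgan_generator_loss(fake_scores):
    """The generator tries to raise the critic's score on its samples."""
    return -mean(fake_scores)

def clip_weights(weights, c=0.01):
    """Keep every critic weight inside [-c, c] after each update."""
    return [max(-c, min(c, w)) for w in weights]

# Hypothetical critic scores (unbounded, not probabilities).
real_scores = [2.0, 1.5, 2.5]
fake_scores = [-1.0, -0.5, -1.5]

print(wgan_critic_loss(real_scores, fake_scores))  # -3.0
print(wgan_generator_loss(fake_scores))            # 1.0
print(clip_weights([0.5, -0.003, -0.2]))           # [0.01, -0.003, -0.01]
```

There is no logarithm or sigmoid in these losses, so the gradient does not flatten out when the critic becomes confident.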

Progressive GAN (PGAN)

Progressive GAN (PGAN) is a form of Generative Adversarial Network that starts training on low-resolution images and progressively adds layers to both the Generator and Discriminator to increase the resolution. Growing the networks this way allows it to generate high-quality, realistic images, and it achieved state-of-the-art results in image synthesis tasks when it was introduced.
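The growth schedule can be sketched in a few lines. The 4x4 starting size and 1024x1024 final size match the original Progressive GAN paper. The helper names are hypothetical, and the blending function is a simplified per-pixel view of the fade-in used when a new layer is introduced.

```python
def resolution_schedule(start=4, final=1024):
    """Resolutions visited during training, doubling at each stage."""
    sizes = [start]
    while sizes[-1] < final:
        sizes.append(sizes[-1] * 2)
    return sizes

def fade_in(old_pixel, new_pixel, alpha):
    """Blend the upsampled old output with the new layer's output.

    alpha ramps from 0.0 (old layers only) to 1.0 (new layer only).
    """
    return (1.0 - alpha) * old_pixel + alpha * new_pixel

print(resolution_schedule())  # [4, 8, 16, 32, 64, 128, 256, 512, 1024]

# Hypothetical pixel values from the old and new output paths.
for alpha in (0.0, 0.5, 1.0):
    print(alpha, fade_in(0.2, 0.8, alpha))
```

Ramping `alpha` gradually avoids a sudden shock to the already trained lower-resolution layers when a new, randomly initialized layer is added.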

StyleGAN and StyleGAN2

StyleGAN and StyleGAN2 are Generative Adversarial Networks that introduce a style-based generator architecture. A latent code is first mapped to a style vector, which then controls the generator at each resolution, separating coarse structure from fine detail. This allows greater control over image generation, resulting in highly realistic and customizable images with improved details and better handling of diverse datasets.
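In the first StyleGAN, styles are injected through adaptive instance normalization (AdaIN): each feature channel is normalized and then re-scaled and shifted by values derived from the style vector. The sketch below applies AdaIN to a single flattened channel. The function name, the input values, and the style values are illustrative assumptions. StyleGAN2 replaces this operation with weight demodulation, which is not shown here.

```python
import math

def adain(channel, style_scale, style_bias, eps=1e-8):
    """Normalize one channel, then apply a style-specific scale and bias."""
    n = len(channel)
    mean = sum(channel) / n
    var = sum((x - mean) ** 2 for x in channel) / n
    std = math.sqrt(var + eps)
    return [style_scale * (x - mean) / std + style_bias for x in channel]

# Hypothetical activations for one channel and one (scale, bias) style pair.
channel = [1.0, 2.0, 3.0, 4.0]
styled = adain(channel, style_scale=2.0, style_bias=0.5)

styled_mean = sum(styled) / len(styled)
styled_std = math.sqrt(sum((x - styled_mean) ** 2 for x in styled) / len(styled))
print(round(styled_mean, 4), round(styled_std, 4))  # 0.5 2.0
```

After the operation, the channel's statistics are set by the style rather than by the input, which is what lets a style vector steer the look of the output at each resolution.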

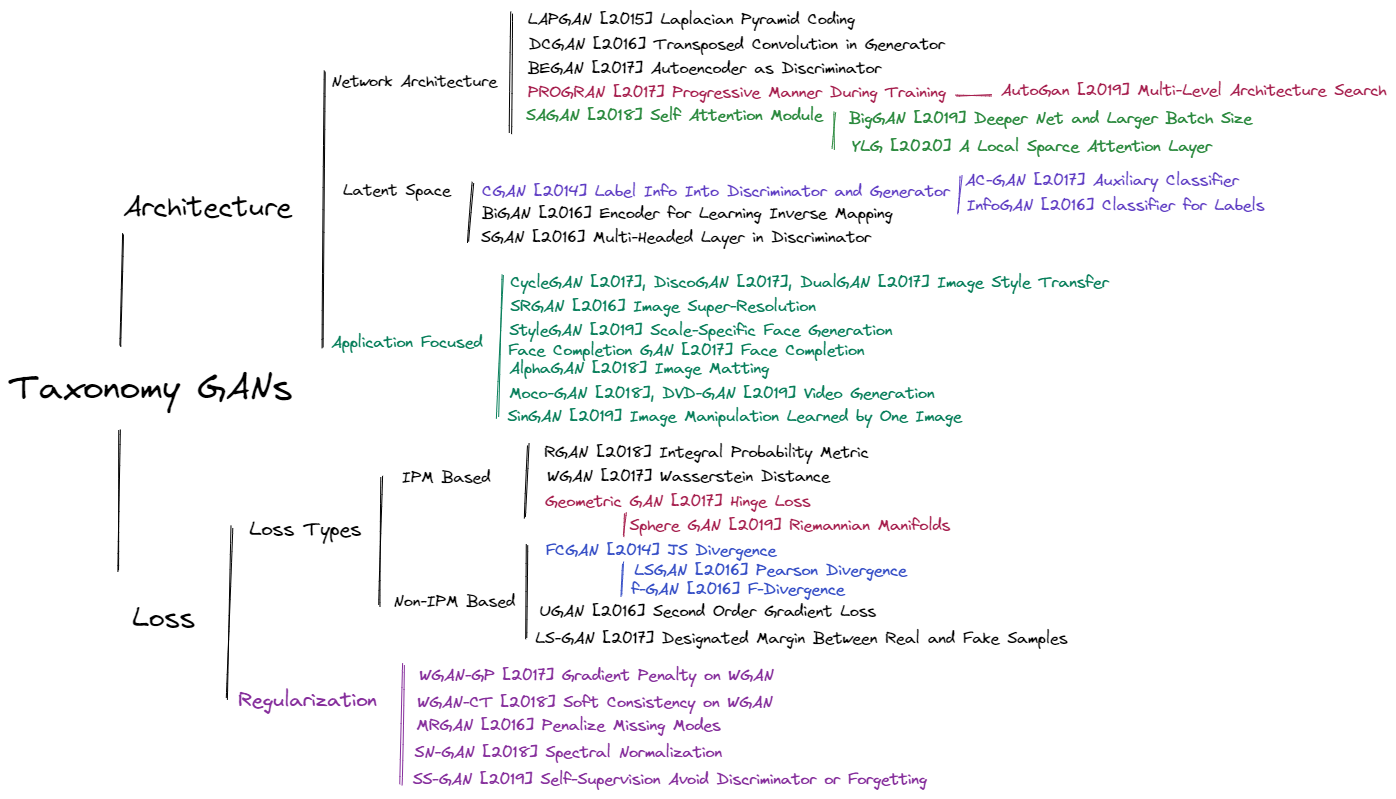

GAN Landscape

To see how all these different versions of GANs fit together, it helps to draw a taxonomy chart that groups them by area, based on recent publications.

Common Problems with GANs

While GANs have been a real game changer for content generation, the concept is still riddled with challenges and problems.

Vanishing Gradients

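When the discriminator becomes too good, it doesn't provide enough gradient information for the generator to make progress. Several modifications to the minimax loss have been proposed to deal with vanishing gradients, such as the non-saturating generator loss and the Wasserstein loss.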
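A small numeric sketch of the problem, assuming the discriminator ends in a sigmoid so that D = sigmoid(logit). It compares the gradient of the original minimax generator term, log(1 - D), with the commonly used non-saturating alternative, -log(D). The function names and the logit values are hypothetical.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def saturating_grad(logit):
    """d/d(logit) of log(1 - D), where D = sigmoid(logit)."""
    return -sigmoid(logit)

def non_saturating_grad(logit):
    """d/d(logit) of -log(D), where D = sigmoid(logit)."""
    return -(1.0 - sigmoid(logit))

# A very negative logit means the discriminator is confident the sample is fake.
for logit in (-6.0, -2.0, 0.0):
    print(
        f"logit={logit:+.1f}  D={sigmoid(logit):.4f}  "
        f"saturating={saturating_grad(logit):+.4f}  "
        f"non-saturating={non_saturating_grad(logit):+.4f}"
    )
```

When the discriminator confidently rejects a sample, the gradient of the original term is close to zero, so the generator barely learns. The non-saturating form keeps the gradient magnitude close to 1 in exactly that regime.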

Mode Collapse

The generator starts to produce the same output (or a small set of outputs) over and over again. How can this happen?

Suppose the generator gets better at producing convincing results for one particular kind of image or data. It will fool the discriminator a bit more with that result, and this will encourage it to produce even more samples of that type. Gradually its output narrows, and it forgets how to produce anything else.
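On toy data where the true modes are known, one rough way to spot this is to count how many modes the generated samples actually land near. The sketch below assumes four known mode centers in 2D. The helper names and all sample values are made up for illustration, and real image datasets need other diagnostics.

```python
import math

def nearest_mode(sample, modes):
    """Index of the mode center closest to the sample."""
    return min(range(len(modes)), key=lambda i: math.dist(sample, modes[i]))

def mode_coverage(samples, modes):
    """Fraction of known modes that receive at least one sample."""
    covered = {nearest_mode(s, modes) for s in samples}
    return len(covered) / len(modes)

# Hypothetical toy dataset with four modes at the corners of a square.
modes = [(1.0, 1.0), (1.0, -1.0), (-1.0, 1.0), (-1.0, -1.0)]

# Hypothetical generator outputs: one healthy run and one collapsed run.
healthy = [(0.9, 1.1), (1.1, -0.8), (-1.2, 0.9), (-0.9, -1.0)]
collapsed = [(0.9, 1.1), (1.0, 0.95), (1.05, 1.0), (0.98, 1.02)]

print(mode_coverage(healthy, modes))    # 1.0
print(mode_coverage(collapsed, modes))  # 0.25
```

A coverage value that drops during training while individual samples still look convincing is a typical sign of mode collapse.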

GANs Are Very Sensitive to Hyperparameters

Typically you'll need to spend a lot of effort fine-tuning hyperparameters to get the results you desire.
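A small sketch of how such a search might be organized. The baseline values (Adam learning rate 0.0002, beta1 0.5, batch size 128, 100-dimensional noise) are the settings reported in the DCGAN paper. The search ranges and helper names are illustrative assumptions, not recommendations.

```python
from itertools import product

# Baseline settings reported in the DCGAN paper.
baseline = {
    "learning_rate": 0.0002,
    "beta1": 0.5,
    "batch_size": 128,
    "latent_dim": 100,
}

# Hypothetical ranges to explore around the baseline.
search_space = {
    "learning_rate": [0.0001, 0.0002, 0.0004],
    "beta1": [0.0, 0.5, 0.9],
    "batch_size": [64, 128],
}

def grid(space):
    """Yield every combination of values in the search space."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))

# Each configuration overrides the baseline with one grid point.
configs = [dict(baseline, **overrides) for overrides in grid(search_space)]

print(len(configs))  # 18
print(configs[0])
```

Even this small grid yields 18 training runs, which shows how quickly the cost of tuning a GAN grows with each additional hyperparameter.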