Chapter 8: Progressive GANs

Progressive Growing GAN is an extension to the GAN training process that allows for the stable training of generator models that can output large, high-quality images.

It involves starting with a very small image and incrementally adding blocks of layers that increase the output size of the generator model and the input size of the discriminator model until the desired image size is achieved.

- GANs are effective at generating sharp images, although they have been limited to small image sizes because training becomes unstable at larger sizes.

- Progressive growing GAN is a stable approach to training GAN models to generate large, high-quality images by incrementally increasing the size of the model during training.

- Progressive growing GAN models can generate remarkably realistic synthetic faces and objects at high resolution.

Generate Large Images by Progressively Adding Layers

A solution to the problem of training stable GAN models for larger images is to progressively increase the number of layers during the training process.

This approach is called Progressive Growing GAN, Progressive GAN, or PGGAN for short.

Progressive Growing GAN involves using a generator and discriminator model with the same general structure and starting with very small images, such as 4x4 pixels.

During training, new blocks of convolutional layers are systematically added to both the generator model and the discriminator model.

The incremental addition of layers allows the models to first learn coarse-level detail effectively and later learn ever finer detail, on both the generator and the discriminator side.
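The growth schedule described above can be written down as the sequence of resolutions the models pass through. This is a minimal sketch that assumes each new block doubles the image side length, starting from 4x4; the 1024x1024 default target is taken from the celebrity-face example later in this chapter, and the function name `resolution_schedule` is hypothetical.

```python
# Minimal sketch: list the resolutions visited while progressively growing a GAN.
# Assumption: every added block doubles the side length (4x4 -> 8x8 -> ...).

def resolution_schedule(start: int = 4, target: int = 1024) -> list[int]:
    """Return the image side lengths visited while growing from start to target."""
    if start <= 0 or target < start:
        raise ValueError("need 0 < start <= target")
    sizes = [start]
    while sizes[-1] < target:
        sizes.append(sizes[-1] * 2)  # each new block doubles the resolution
    if sizes[-1] != target:
        raise ValueError("target must equal start * 2**k for some k")
    return sizes


if __name__ == "__main__":
    for size in resolution_schedule():
        print(f"{size}x{size}")
```

Each step in this list corresponds to one new block added to the generator and a matching block added to the discriminator.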

How to Progressively Grow a GAN

Progressive growing GAN adds new blocks of layers by phasing them in gradually rather than inserting them directly.

Importantly, the new blocks are not simply thrown in; they are faded in smoothly. This avoids sudden shocks to the already well-trained, lower-resolution layers.
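One way to picture the fade-in is as a weight, often called alpha, that controls how much the new block contributes: it starts at 0 (only the old path is used) and rises linearly to 1 (only the new block is used). The sketch below assumes a linear ramp measured in training steps; `fade_steps` and the function names are hypothetical choices for illustration.

```python
# Minimal sketch of a linear fade-in schedule for a newly added block.
# `fade_steps` (length of the fade-in phase) is a hypothetical parameter.

def fade_in_alpha(step: int, fade_steps: int) -> float:
    """Weight of the new block: rises linearly from 0.0 to 1.0, then stays at 1.0."""
    if fade_steps <= 0:
        return 1.0  # no fade-in phase: the new block is fully active at once
    return min(max(step / fade_steps, 0.0), 1.0)


def blend(old_path: float, new_path: float, alpha: float) -> float:
    """Weighted sum of the existing (old) path and the new block's path."""
    return (1.0 - alpha) * old_path + alpha * new_path


if __name__ == "__main__":
    for step in (0, 250, 500, 1000, 1500):
        a = fade_in_alpha(step, fade_steps=1000)
        print(step, a, blend(10.0, 20.0, a))
```

With `fade_steps=1000`, alpha is 0.25 at step 250 and is clamped at 1.0 once the fade-in phase is over, so the old path stops contributing.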

As an example, consider a generator and discriminator that currently work with 16x16 images, to which we want to add a new block for 32x32 images.

Growing the Generator

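Suppose the generator currently outputs 16x16 images and we want to add a new block of convolutional layers that outputs 32x32 images.

The output of this new block is combined with the output of the 16x16 layer, which is upsampled to 32x32 using nearest neighbor interpolation. The combination is a weighted sum: the upsampled 16x16 output is weighted by (1 - alpha) and the new block's output by alpha, where alpha increases linearly from 0 to 1 during the fade-in. This use of nearest neighbor upsampling differs from many GAN generators, which use a transpose convolutional layer.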
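The generator-side fade-in can be sketched in plain Python. This toy version assumes each image is a single-channel 2D list of floats standing in for the generator's real multi-channel output, and `new_block_output` is a hypothetical placeholder for whatever the new 32x32 block produces; the function names are illustrative, not from any library.

```python
# Toy sketch of growing the generator: nearest-neighbor upsampling plus fade-in.
# Assumption: images are single-channel 2D lists of floats (not real tensors).

Image = list[list[float]]


def upsample_nearest(img: Image, factor: int = 2) -> Image:
    """Nearest-neighbor upsampling: repeat each pixel factor x factor times."""
    out: Image = []
    for row in img:
        wide = [v for v in row for _ in range(factor)]  # repeat along width
        for _ in range(factor):                          # repeat along height
            out.append(list(wide))
    return out


def grow_generator_output(old_output: Image, new_block_output: Image, alpha: float) -> Image:
    """Blend the upsampled old output with the new block's output using alpha."""
    up = upsample_nearest(old_output)
    if len(up) != len(new_block_output) or len(up[0]) != len(new_block_output[0]):
        raise ValueError("new block must output twice the old resolution")
    return [
        [(1.0 - alpha) * a + alpha * b for a, b in zip(row_up, row_new)]
        for row_up, row_new in zip(up, new_block_output)
    ]


if __name__ == "__main__":
    old = [[1.0, 2.0], [3.0, 4.0]]            # stands in for the 16x16 output
    new = [[0.0] * 4 for _ in range(4)]       # stands in for the new 32x32 output
    for alpha in (0.0, 0.5, 1.0):
        print(alpha, grow_generator_output(old, new, alpha))
```

At alpha = 0 the result is exactly the upsampled old image, so adding the block does not change the generator's output at first; as alpha grows, the new block gradually takes over.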

Growing the Discriminator

For the discriminator, this involves adding a new block of convolutional layers for the input of the model to support image sizes with 32x32 pixels.

The input image is downsampled to 16x16 using average pooling so that it can pass through the existing 16x16 convolutional layers. The output of the new 32x32 block of layers is also downsampled using average pooling so that it can be provided as input to the existing 16x16 block. The two paths are combined in a weighted sum: the new block's path is weighted by alpha, which rises linearly from 0 to 1 during the fade-in, and the directly downsampled image by (1 - alpha). This differs from most GAN models, which downsample using a stride of 2 in the convolutional layers.
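The discriminator side mirrors the generator. This toy sketch again assumes single-channel 2D lists of floats instead of real tensors; `new_block` is a hypothetical callable standing in for the new 32x32 convolutional block, and the function names are illustrative only.

```python
# Toy sketch of growing the discriminator: 2x2 average pooling plus fade-in.
# Assumption: images are single-channel 2D lists of floats (not real tensors).
from typing import Callable

Image = list[list[float]]


def avg_pool2(img: Image) -> Image:
    """2x2 average pooling with stride 2 (halves height and width)."""
    h, w = len(img), len(img[0])
    if h % 2 or w % 2:
        raise ValueError("image sides must be even")
    return [
        [
            (img[i][j] + img[i][j + 1] + img[i + 1][j] + img[i + 1][j + 1]) / 4.0
            for j in range(0, w, 2)
        ]
        for i in range(0, h, 2)
    ]


def grow_discriminator_input(image: Image, new_block: Callable[[Image], Image], alpha: float) -> Image:
    """Build the half-resolution input fed to the existing lower-resolution layers."""
    old_path = avg_pool2(image)             # skip path: downsample the raw image
    new_path = avg_pool2(new_block(image))  # new block, then downsample its output
    return [
        [(1.0 - alpha) * a + alpha * b for a, b in zip(r_old, r_new)]
        for r_old, r_new in zip(old_path, new_path)
    ]


if __name__ == "__main__":
    img = [[1.0, 1.0, 2.0, 2.0], [1.0, 1.0, 2.0, 2.0], [3.0, 3.0, 4.0, 4.0], [3.0, 3.0, 4.0, 4.0]]
    zero_block = lambda x: [[0.0 for _ in row] for row in x]  # hypothetical new block
    for alpha in (0.0, 0.5, 1.0):
        print(alpha, grow_discriminator_input(img, zero_block, alpha))
```

As in the generator, alpha = 0 leaves the existing layers seeing exactly what they saw before the block was added.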

Examples

Synthetic Photographs of Celebrity Faces

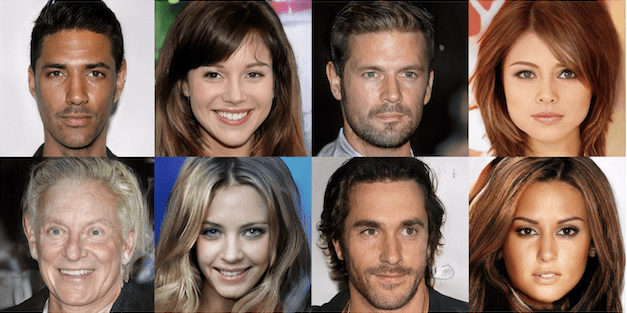

Perhaps the most impressive accomplishment of the Progressive Growing GAN is the generation of large, photorealistic 1024x1024-pixel faces.


These high-resolution, photorealistic results were computationally expensive and took days of training. For example, the model was trained on 8 Tesla V100 GPUs for 4 days, after which no further qualitative differences were observed between the results of consecutive training iterations.

Synthetic Photographs of Objects

The model was also demonstrated on generating 256x256-pixel photorealistic synthetic objects from the LSUN dataset, such as bikes, buses, and churches.

Further Reading

There are many papers on the subject; one particularly useful paper provides comprehensive details on configuring progressive growing GAN models with regard to stability, quality, and variation:

Title: Progressive Growing of GANs for Improved Quality, Stability, and Variation